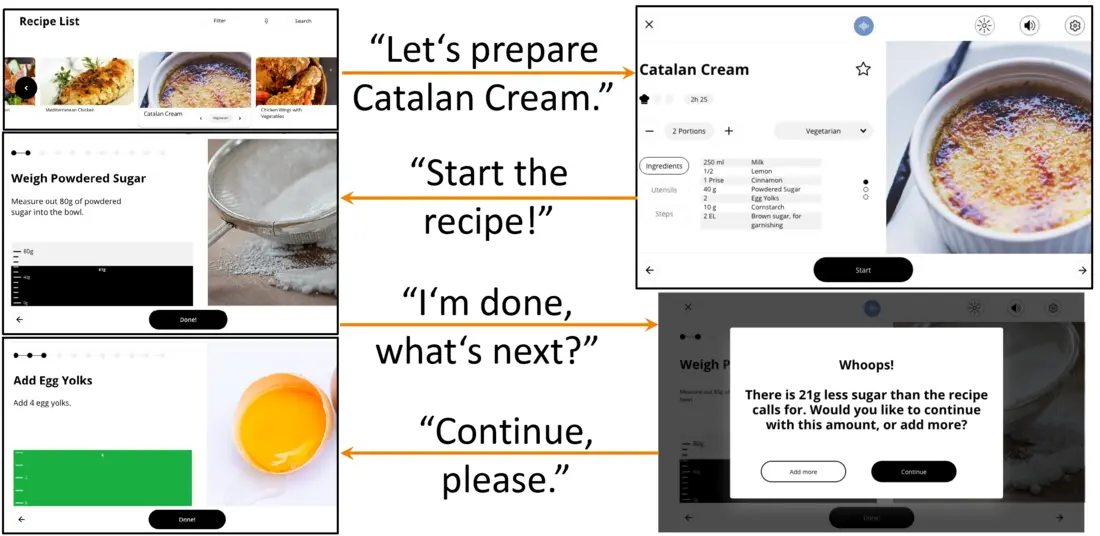

Multi-modal interaction options offer a high degree of accessibility, novelty and user-friendliness for smart kitchen devices.

In the UX design master's project Natural User Interfaces in summer 2021, we designed and prototyped such a multi-modal interaction: guided cooking with a smart kitchen appliance that accepts speech input in addition to touch gestures and responds with voice output. Nothing stands in the way of operating the appliance, even when your hands are dirty or occupied.

The project resulted in a paper that was accepted at ICNLSP 2021: https://aclanthology.org/2021.icnlsp-1.30

The code for the prototype is available on GitHub at the following link: https://github.com/VoiceCookingAssistant/Audio-Visual-Cooking-Assistant

![The prototype consists of three components: State Machine Frontend, Middleware Backend, and Logical Backend](/fileadmin/_processed_/e/5/csm_Audio_Visual_Recipe_Guidance_Architecture_846a195326.webp)
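
To illustrate the idea of a state machine that treats touch and speech as interchangeable inputs, here is a minimal Python sketch. It is not taken from the prototype's repository: the class `GuidedRecipe`, the intent names, and the gesture and phrase mappings are all hypothetical and chosen only for illustration. The returned step text stands in for what a real system would both display and speak.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical intents; the prototype's real vocabulary may differ.
NEXT, BACK, REPEAT = "next", "back", "repeat"

# Hypothetical mappings from raw touch gestures and spoken phrases to intents.
TOUCH_TO_INTENT = {"swipe_left": NEXT, "swipe_right": BACK, "tap": REPEAT}
SPEECH_TO_INTENT = {"next": NEXT, "continue": NEXT, "back": BACK, "repeat": REPEAT}


def to_intent(modality: str, raw: str) -> Optional[str]:
    """Normalize a raw input from either modality to a shared intent."""
    if modality == "touch":
        return TOUCH_TO_INTENT.get(raw)
    if modality == "speech":
        return SPEECH_TO_INTENT.get(raw.strip().lower())
    return None


@dataclass
class GuidedRecipe:
    """State machine whose state is the index of the current recipe step."""
    steps: List[str]
    index: int = 0

    def handle(self, modality: str, raw: str) -> str:
        """Apply one input and return the step to display and read aloud."""
        intent = to_intent(modality, raw)
        if intent == NEXT and self.index < len(self.steps) - 1:
            self.index += 1
        elif intent == BACK and self.index > 0:
            self.index -= 1
        # REPEAT and unrecognized input leave the current step unchanged.
        return self.steps[self.index]


recipe = GuidedRecipe(["Chop the onions", "Heat the pan", "Fry the onions"])
print(recipe.handle("speech", "Next"))       # spoken command
print(recipe.handle("touch", "swipe_left"))  # touch gesture, same effect
```

Because both modalities are reduced to the same intents before the state changes, the user can switch freely between touching the screen and speaking within a single recipe.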
