The use of artificial intelligence in medicine offers opportunities for more precise diagnoses and relief from routine tasks. But what understanding of the technology do medical professionals need in order to develop the "right" level of trust in such systems? Does the use of AI have ethically relevant repercussions for the relationship between doctor and patient? A project led by Technische Hochschule Ingolstadt (THI) and Katholische Universität Eichstätt-Ingolstadt (KU) is investigating such questions. The cooperation partners are Prof. Dr. Matthias Uhl (Professor for Social Implications and Ethical Aspects of AI) and Prof. Dr.-Ing. Marc Aubreville (Professor for Image Understanding and Medical Applications of Artificial Intelligence) from THI and Prof. Dr. Alexis Fritz (Chair of Moral Theology) from KU. The project, entitled "Responsibility Gaps in Human-Machine Interactions: The Ambivalence of Trust in AI", is funded by the Bavarian Research Institute for Digital Transformation (bidt).

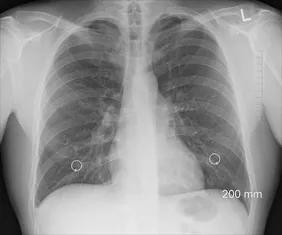

Monotonous activities are tiring and time-consuming for humans. When experienced medical professionals evaluate dozens of mammograms in a row, for example, they can overlook small details that are important for the diagnosis. The use of AI can potentially relieve the burden here and support human decision-makers. "The basic prerequisite is that the human experts must be able to trust the AI system. However, this can also lead to the doctor no longer critically reviewing the AI's decision," says Prof. Dr. Marc Aubreville. Even systems that are already used in the medical field are not infallible, which is why the procedures still include humans as the final decision-making authority.

But is this enough to establish serious accountability in the interaction between humans and machines? "The simple approach of integrating a human into the processes to correct misguided decisions is too naive," explains Prof. Dr. Alexis Fritz. Just as people feel less responsible when they make joint decisions with other humans, studies show that the same is true when human decision-makers have been advised by a recommendation system. Prof. Dr. Matthias Uhl summarises the results of his own empirical studies as follows: "In various morally relevant decision contexts, we see that people still follow the recommendations of an AI even when we give them good reasons to doubt the system's recommendations, for example the quality of the training data."

"From an ethics perspective, it is too narrow to optimise AI systems only in purely technical terms. We therefore want to compare, for example, what happens when the AI does not make the first recommendation, but a doctor first makes a diagnosis, which is then only validated by the AI in the second step," Fritz describes. He will research the normative prerequisites for decision-makers to remain aware of their ability to act and to shift less responsibility to an AI. To this end, existing studies on the ethical challenges of the interaction between medical professionals and AI-based systems will be reviewed and analysed. The different concepts of responsibility and accountability will then be evaluated in medical practice through workshops and qualitative interviews with medical professionals and engineers. Among other things, the relationship between doctor and patient will play a role: Do doctors, for example, feel that their authority in the eyes of patients is questioned when they consult a recommendation system?

In general, the project participants want to provide insights for the development of user-aware AI solutions. "Today, many solutions are designed without consideration of the subsequent decision-making processes. Based on our findings, we will therefore evaluate how algorithmic results and uncertainties can best be presented to the expert in charge. The goal here is to properly weight trust in the advice of recommender systems, especially in situations outside the norm where the algorithm may not provide the best recommendation," says Fritz.
